Supervision from Context

Examples

Ideas & Goals

- Learn word representations from the surrounding context in a sentence

- Enable accurate syntactic & semantic vector arithmetic [1] (e.g. king - man + woman ≈ queen)

- Create a comprehensive test set for syntactic & semantic regularities
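
The vector arithmetic goal can be illustrated with a minimal sketch. The 2-d embeddings below are hand-picked toy values rather than learned vectors, and the helper names `cosine` and `analogy` are hypothetical; the sketch only shows how an analogy question is answered by a nearest-neighbour search around `vec(b) - vec(a) + vec(c)`.

```python
import math

# Toy 2-d embeddings, hand-picked for illustration (NOT learned vectors).
# Axis 0 loosely encodes "royalty", axis 1 loosely encodes "gender".
EMBEDDINGS = {
    "king":  [1.0, 1.0],
    "queen": [1.0, -1.0],
    "man":   [0.0, 1.0],
    "woman": [0.0, -1.0],
    "apple": [-1.0, 0.0],
}


def cosine(u, v):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def analogy(a, b, c, embeddings):
    """Answer 'a is to b as c is to ?' via vec(b) - vec(a) + vec(c)."""
    target = [
        vb - va + vc
        for va, vb, vc in zip(embeddings[a], embeddings[b], embeddings[c])
    ]
    # The three question words are excluded from the candidate answers.
    candidates = [w for w in embeddings if w not in (a, b, c)]
    return max(candidates, key=lambda w: cosine(target, embeddings[w]))


print(analogy("man", "king", "woman", EMBEDDINGS))
```

A test set for regularities then amounts to many such `(a, b, c, expected)` tuples, scored by how often the nearest neighbour matches the expected word.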

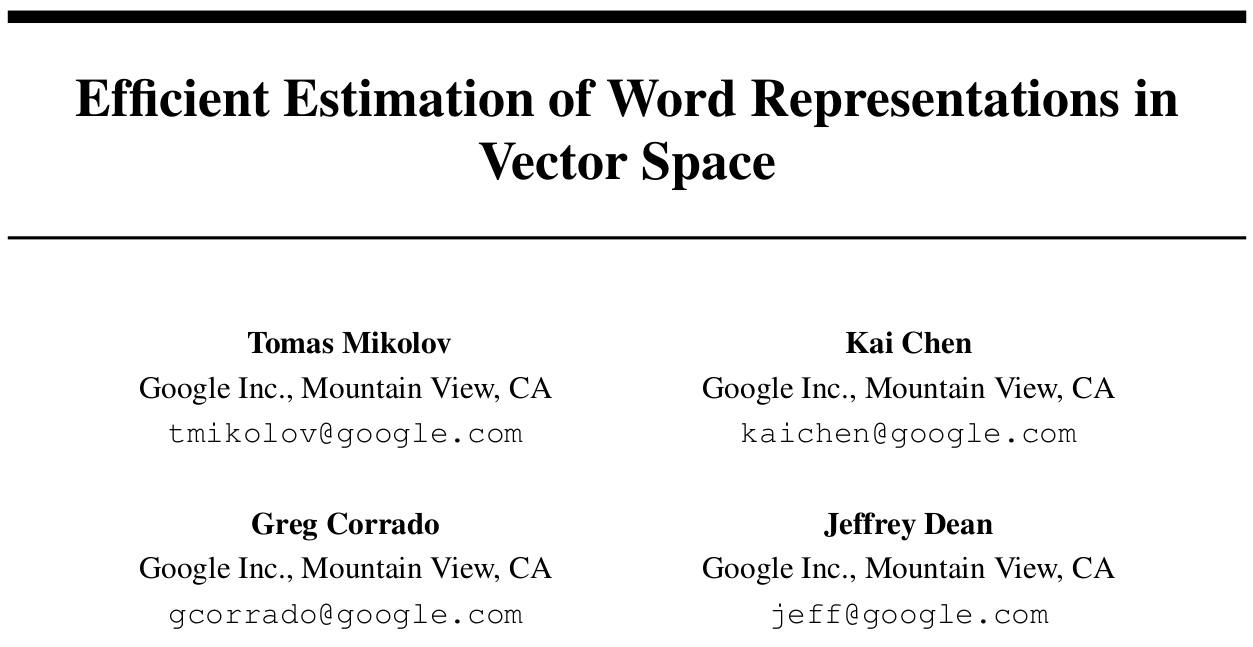

Semantic & Syntactic Relations in Test


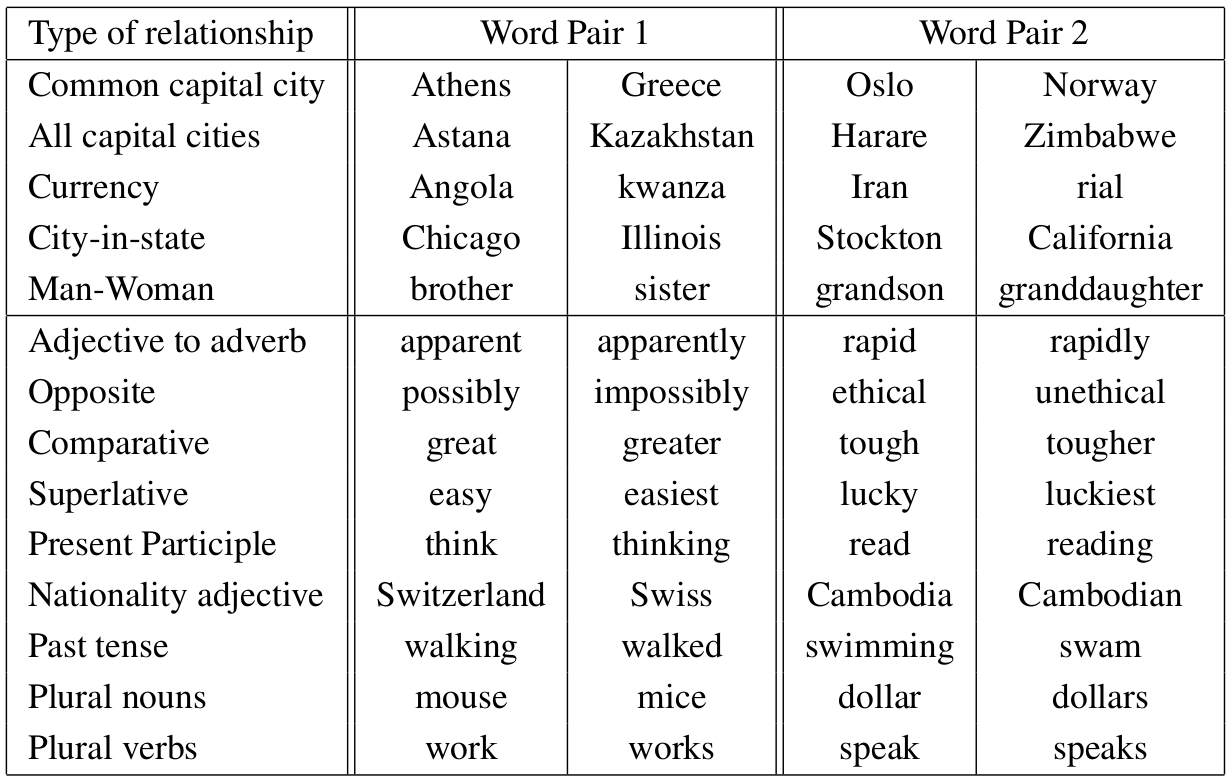

Proposed Architectures


- CBOW = Continuous Bag-of-Words

- CBOW shares one projection matrix across all context positions

- Input words are one-hot vectors over the vocabulary
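
A minimal sketch of a CBOW forward pass, under stated assumptions: the function names are hypothetical, the weights are random, and a plain softmax over the vocabulary is used for simplicity, which may differ from the output layer in the original work. It shows the two points above: one-hot inputs and a single projection matrix shared by every context position, which makes the prediction independent of context word order.

```python
import math
import random


def one_hot(index, vocab_size):
    vec = [0.0] * vocab_size
    vec[index] = 1.0
    return vec


def project(one_hot_vec, projection):
    """Multiply a one-hot row vector by the V x D projection matrix."""
    dim = len(projection[0])
    return [
        sum(x * row[d] for x, row in zip(one_hot_vec, projection))
        for d in range(dim)
    ]


def cbow_forward(context_ids, projection, output_weights):
    """Return a probability distribution over the vocabulary for the centre word.

    projection is V x D, output_weights is D x V (both assumptions of this sketch).
    """
    vocab_size = len(projection)
    dim = len(projection[0])
    # Every context position uses the same projection matrix (shared weights).
    projected = [project(one_hot(i, vocab_size), projection) for i in context_ids]
    # Averaging discards word order: a "bag" of context words.
    hidden = [sum(p[d] for p in projected) / len(projected) for d in range(dim)]
    scores = [
        sum(h * output_weights[d][w] for d, h in enumerate(hidden))
        for w in range(vocab_size)
    ]
    # Numerically stable softmax (a simplification chosen for this sketch).
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def random_matrix(rows, cols, rng):
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


rng = random.Random(0)
P = random_matrix(5, 3, rng)   # vocabulary of 5 words, 3-d embeddings
W = random_matrix(3, 5, rng)
print(cbow_forward([0, 2, 3], P, W))
```

Multiplying a one-hot vector by the projection matrix just selects one row, so a practical implementation would index the row directly instead of performing the product.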

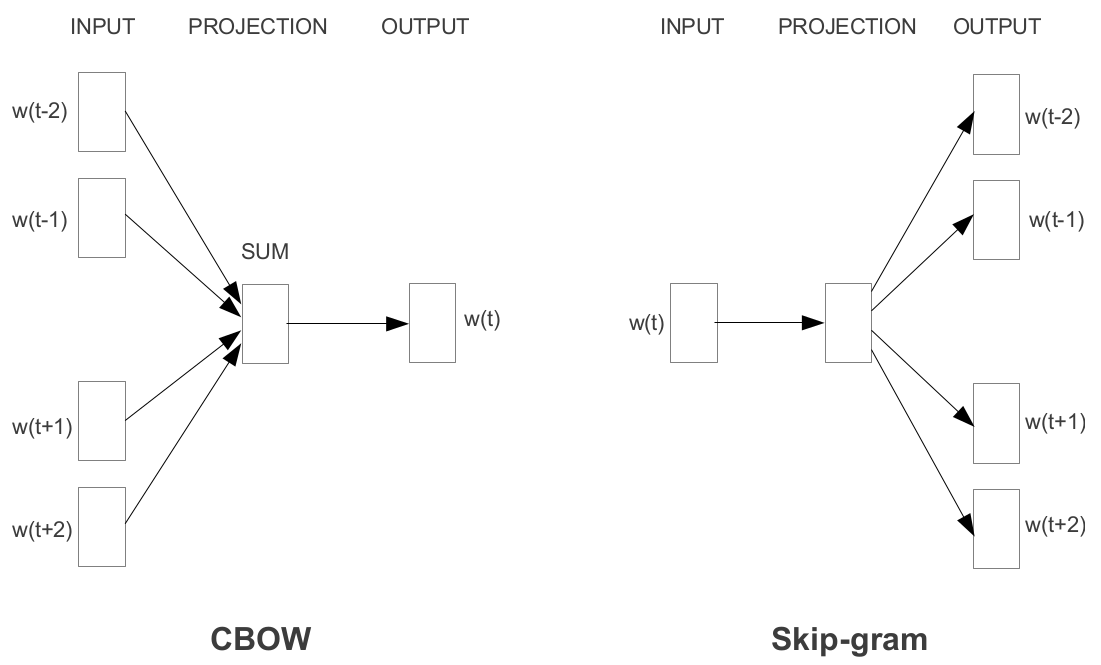

Results


Ideas & Goals

- Leverage intra-image correlations for learning

- Improve "unsupervised" pre-training

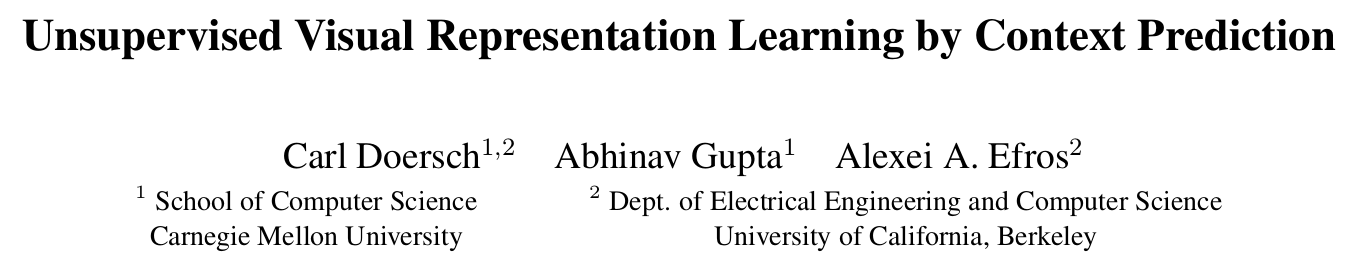

Context Classification


- Predict the position of a second patch relative to the first

- Random jitter and a gap between the patches keep the network from learning trivial relations
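
The sampling of a training example for this pretext task could look roughly like the sketch below. The patch size, gap, and jitter values are illustrative assumptions, not the settings of the original work, and `sample_patch_pair` is a hypothetical helper. The label is the index of one of eight neighbour positions around the first patch.

```python
import random

# The eight neighbour positions around the first patch, as (row, col) steps.
NEIGHBOUR_STEPS = [(-1, -1), (-1, 0), (-1, 1),
                   (0, -1),           (0, 1),
                   (1, -1),  (1, 0),  (1, 1)]


def sample_patch_pair(height, width, patch=8, gap=4, jitter=2, rng=random):
    """Return (first_box, second_box, label); boxes are (top, left, size).

    Assumes gap > jitter so the two patches never overlap, and an image
    large enough that height and width are at least patch + 2 * margin.
    """
    stride = patch + gap
    margin = stride + jitter
    # Keep the first patch far enough from the border that any neighbour fits.
    top = rng.randint(margin, height - patch - margin)
    left = rng.randint(margin, width - patch - margin)
    label = rng.randrange(len(NEIGHBOUR_STEPS))
    d_row, d_col = NEIGHBOUR_STEPS[label]
    # The gap removes shared edges; the jitter removes exact grid alignment.
    second_top = top + d_row * stride + rng.randint(-jitter, jitter)
    second_left = left + d_col * stride + rng.randint(-jitter, jitter)
    return (top, left, patch), (second_top, second_left, patch), label


first, second, label = sample_patch_pair(64, 64, rng=random.Random(0))
print(first, second, label)
```

Without the gap, a network could solve the task by matching lines or textures that continue across the shared patch border, which is the kind of trivial relation the bullet refers to.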

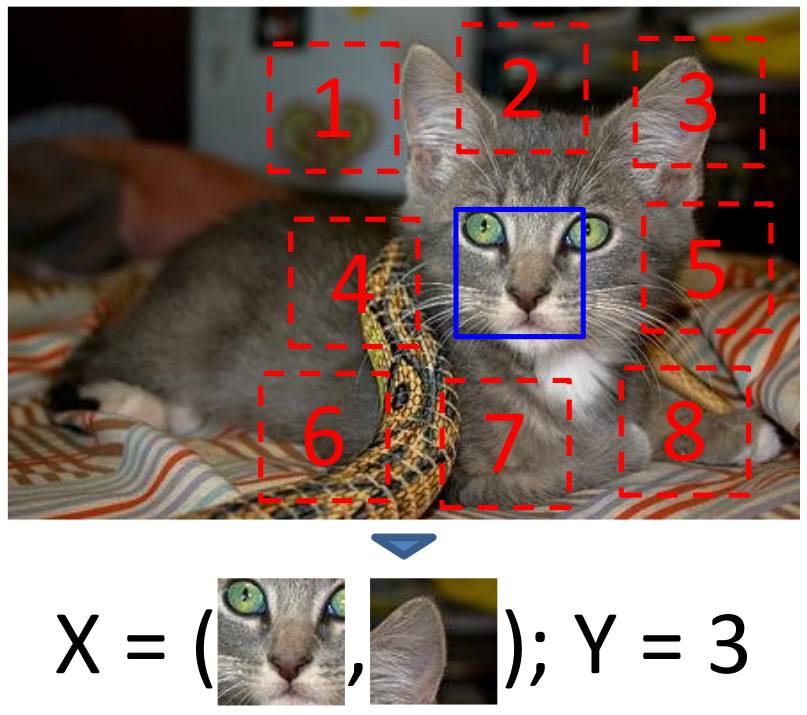

Architecture


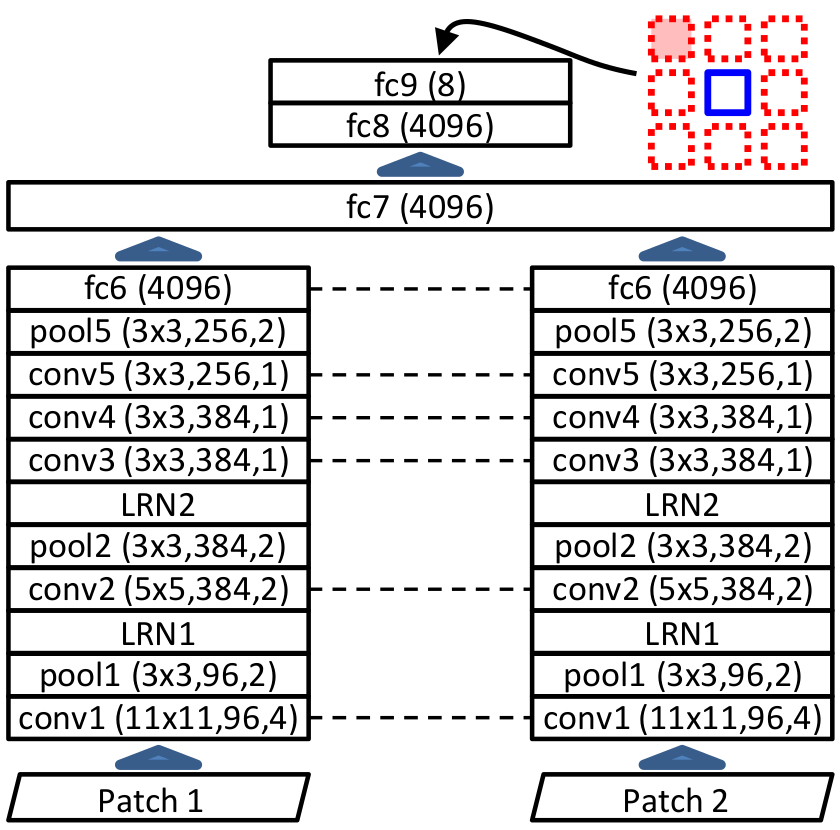

Nearest-Neighbour Examples


- Representation from fc6 layer

- Nearest neighbours (NN) found via normalized cross-correlation of the features
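
A minimal sketch of nearest-neighbour retrieval by normalized cross-correlation, assuming feature vectors (such as fc6 activations) are already available as plain lists. Here the measure is taken to be the mean-subtracted, norm-normalized dot product; whether the original work subtracts the mean is an assumption of this sketch, and the function names are hypothetical.

```python
import math


def normalized_cross_correlation(u, v):
    """Mean-subtracted, norm-normalized correlation of two equal-length vectors."""
    mean_u = sum(u) / len(u)
    mean_v = sum(v) / len(v)
    centred_u = [a - mean_u for a in u]
    centred_v = [b - mean_v for b in v]
    denom = (math.sqrt(sum(a * a for a in centred_u))
             * math.sqrt(sum(b * b for b in centred_v)))
    if denom == 0.0:
        return 0.0  # a constant vector carries no correlation signal
    return sum(a * b for a, b in zip(centred_u, centred_v)) / denom


def nearest_neighbours(query, gallery, k=1):
    """Return the indices of the k gallery vectors most correlated with query."""
    ranked = sorted(
        range(len(gallery)),
        key=lambda i: normalized_cross_correlation(query, gallery[i]),
        reverse=True,
    )
    return ranked[:k]


query = [1.0, 2.0, 3.0, 4.0]
gallery = [[4.0, 3.0, 2.0, 1.0], [10.0, 20.0, 30.0, 41.0],
           [0.0, 0.0, 0.0, 0.0], [1.0, 0.0, 1.0, 0.0]]
print(nearest_neighbours(query, gallery, k=2))
```

Because of the normalization, the ranking ignores the overall scale and offset of the activations and depends only on their pattern.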

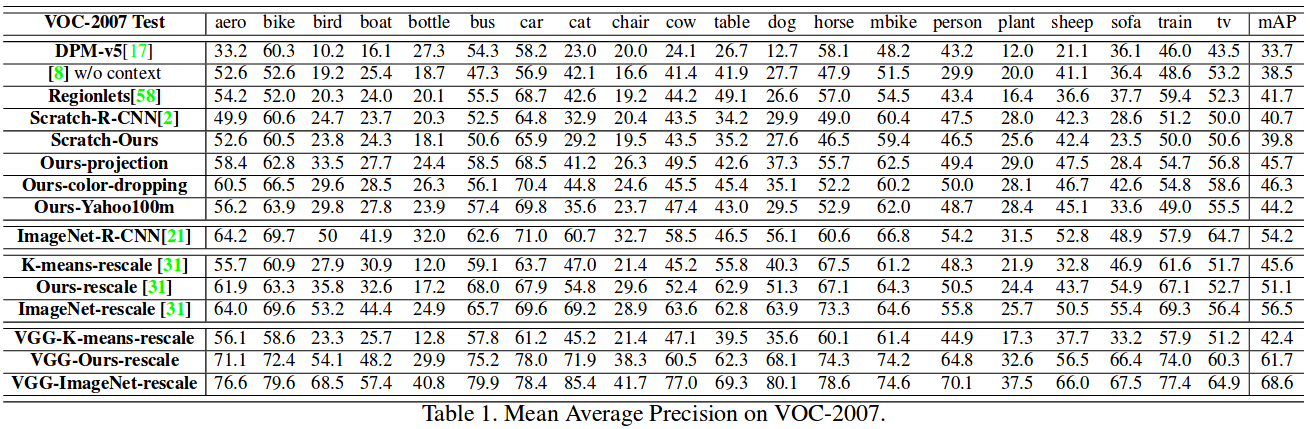

Results (VOC-2007)


Findings

- Not as good as supervised pre-training (ImageNet)

- Better than existing unsupervised pre-training

- Representation still not ideal: the network can exploit chromatic aberration as a shortcut

Transformation Invariance

Example

Ideas & Goals

- Use pairs of images taken from different viewpoints to predict the camera motion between them

- Find a representation useful for higher-level tasks

SF and KITTI dataset examples


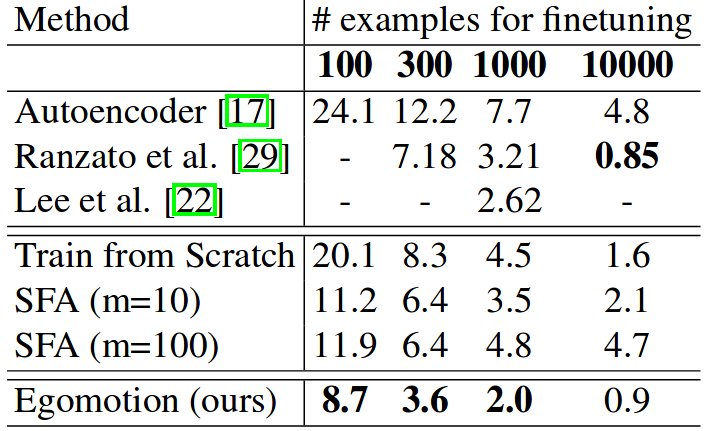

MNIST Results: Random Transformations


- Random translation & rotation $\rightarrow$ classify transformation

- Simple Siamese network with a varying base network
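
The data side of this pretext task can be sketched as follows. To stay dependency-free, the sketch covers only the translation part (rotation is omitted), and the shift range, the per-axis label encoding, and the helper names are illustrative assumptions. Each training example is a pair of images plus the class of the transformation between them; a Siamese network would encode both images with the same base network and classify the transformation from the two encodings.

```python
import random

MAX_SHIFT = 3  # illustrative range: shifts are drawn from -3..3 pixels


def translate(image, dx, dy):
    """Shift a 2-d list image by (dx, dy) pixels, filling exposed cells with 0."""
    height, width = len(image), len(image[0])
    shifted = [[0] * width for _ in range(height)]
    for row in range(height):
        for col in range(width):
            src_row, src_col = row - dy, col - dx
            if 0 <= src_row < height and 0 <= src_col < width:
                shifted[row][col] = image[src_row][src_col]
    return shifted


def make_training_pair(image, rng=random):
    """Return (original, transformed, labels) for one Siamese training example."""
    dx = rng.randint(-MAX_SHIFT, MAX_SHIFT)
    dy = rng.randint(-MAX_SHIFT, MAX_SHIFT)
    # One class per integer shift, so each axis is a 7-way classification.
    labels = (dx + MAX_SHIFT, dy + MAX_SHIFT)
    return image, translate(image, dx, dy), labels


image = [[0] * 5 for _ in range(5)]
image[2][2] = 1
original, transformed, labels = make_training_pair(image, random.Random(1))
print(labels)
```

No manual annotation is needed: the label is known because the transformation was generated by the sampler itself.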

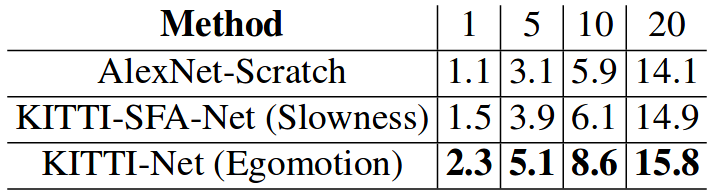

ILSVRC-12 Top-5 Accuracy


- Base architecture similar to AlexNet

- Also gives good results for keypoint matching & odometry

- Fine-tuning with 1, 5, 10, or 20 examples per class

Environment Exploration

Example